I decided to start a small series of posts about one of my recent MVVM projects: the issues I faced and the solutions I found while working on it.

First let me give a little bit of history and an overview.

I work at a Biomedical Engineering Center. About a year and a half ago our company put a freeze on spending, and as a result multiple projects were shelved. This project, let’s call it SHMMP Manager, was supposed to be developed by an outside vendor that we had already picked and provided with functional specifications. After the freeze we had to scramble and bail out of the contract. Luckily the vendor was very accommodating, even though they were losing a big chunk of money.

The decision was made to develop the application internally, but only after all the issues with the current application were resolved. The current (now previous) application was developed 10 years ago in Access VBA with a SQL Server back end. It took several months to close the outstanding issues, and in October of 2009 we began planning and requirements gathering. Even though we had most of the specs developed, we felt it would be wise to revisit the document once again and make changes where necessary.

After a month of talking to subject matter experts I decided the document was sufficiently complete and started designing the application’s architecture. Several months into the project I realized that my interrogation of the subject matter experts had not been thorough enough, and I had to make multiple adjustments to the application’s design.

Here is a high-level overview of the application features:

- Automatic generation of PDF web reports

- Flexible permissions manager for report users

- Document imaging, context search and retrieval

- Flexible error validations

- Robust billing

- Full auditing

- Automation of business processes that were not automated in the current application

- Better data mining

After requirements, functional specs and database design, the next step was to develop a data migration module. This module was supposed to convert the old data to the new structure. That sounds simple, but it did not turn out to be. The old data was dirty, not normalized and had a completely different structure, so this step turned into a major cleaning project with multiple corrections to the database design. Most of the changes were related to improving enforcement of data integrity. Additional steps were taken to split the database into 3 parts:

- online processing (tuned for making changes: INSERTs and UPDATEs)

- analytical processing (tuned for fast retrieval, for reports)

- auditing (tracking changes made to the first database)

This separation allowed us to maintain high performance for both data entry and reporting, and as a positive side effect it also helped us improve our disaster recovery procedures. For example, if one database crashes, the other two are not affected and may continue operating. On top of this we also have multiple hardware and software redundancies, such as backups, transaction log shipping, an extra server and HDD mirroring. At times it is easier to undo a single incorrect user operation using one of the two extra databases.

Constant change requests early on in the project forced me to use code generation techniques.

For SQL Server this was pretty straightforward, as T-SQL lets you walk the structure of the database. All the triggers, many stored procedures and the two extra databases were generated automatically by a much smaller piece of code. This allowed me to introduce structural changes rapidly and generate about 50K lines of code in a couple of minutes. It also improved robustness: the generator was tested and evaluated very frequently, while its footprint was very small.
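To give a feel for the idea, here is a minimal sketch of metadata-driven generation. It is not the generator from the project (that one was written in T-SQL); it is a small Python illustration, and the table names, the `AuditDb` database name, the `_Audit` suffix and the `ChangedAt` column are all made up for the example. It assumes the list of tables and columns has already been read from the database catalog.

```python
# Hypothetical schema description. A real generator would read this from the
# database catalog instead of hard-coding it.
SCHEMA = {
    "Equipment": ["EquipmentId", "SerialNumber", "Location"],
    "WorkOrder": ["WorkOrderId", "EquipmentId", "Cost"],
}


def audit_trigger_sql(table, columns, audit_db="AuditDb"):
    """Return T-SQL text for a trigger that copies changed rows to an audit table."""
    column_list = ", ".join(f"[{name}]" for name in columns)
    lines = [
        f"CREATE TRIGGER [trg_{table}_Audit] ON [dbo].[{table}]",
        "AFTER INSERT, UPDATE",
        "AS",
        "BEGIN",
        "    SET NOCOUNT ON;",
        # 'inserted' is the pseudo-table SQL Server exposes inside a trigger.
        f"    INSERT INTO [{audit_db}].[dbo].[{table}_Audit] ({column_list}, [ChangedAt])",
        f"    SELECT {column_list}, GETDATE() FROM inserted;",
        "END;",
    ]
    return "\n".join(lines)


def generate_all(schema):
    """Generate one trigger script per table in the schema description."""
    return {table: audit_trigger_sql(table, cols) for table, cols in schema.items()}


if __name__ == "__main__":
    for script in generate_all(SCHEMA).values():
        print(script)
        print("GO")
```

The point of the sketch is the ratio: a few dozen lines of generator produce one trigger per table, so a structural change means rerunning the generator rather than editing every trigger by hand.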

For C# generation I had to use the Text Template Transformation Toolkit (T4), provided by Microsoft as part of the Visual Studio IDE. Microsoft was already using a script for its LINQ to SQL classes, and I decided to use it as a base example to generate the view model (business layer) for the application. It turned out to be very helpful. It now generates about 80K lines of code for all the tables, views and functions in the data model.

Here are several screenshots from the application.

Application Release Notes

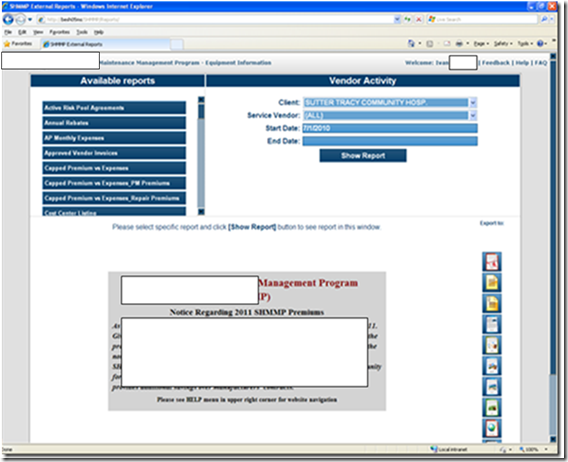

Users can change the Welcome message, which appears on the reports web site.

The reports web site is auto-generated as well. Once a report is designed in SQL Server Report Designer, it is dropped into Report Server; then, using SHMMP Manager, authorized users decide who may see which reports and what data on those reports. An external user who has received permission to view particular datasets and reports will see them appear in the available reports section.

All report parameters are automatically generated based on the XML structure of the selected report.

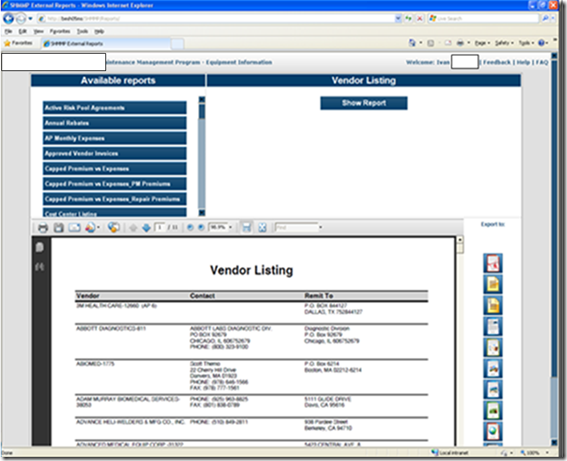
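As a rough illustration of deriving the parameter inputs from a report's XML, here is a small Python sketch. It is not the code from the application. The XML below is a simplified, namespace-free stand-in for a report definition, and the control names (`date_picker`, `text_box` and so on) are hypothetical labels for whatever input widgets the web site would render.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical report definition used only for this example.
SAMPLE_REPORT = """
<Report>
  <ReportParameters>
    <ReportParameter Name="StartDate">
      <DataType>DateTime</DataType>
      <Prompt>Start date</Prompt>
    </ReportParameter>
    <ReportParameter Name="Department">
      <DataType>String</DataType>
      <Prompt>Department</Prompt>
    </ReportParameter>
  </ReportParameters>
</Report>
"""

# Hypothetical mapping from a parameter's data type to an input control.
CONTROL_FOR_TYPE = {
    "DateTime": "date_picker",
    "String": "text_box",
    "Integer": "number_box",
    "Boolean": "check_box",
}


def parameter_fields(report_xml):
    """Return one input-field description per parameter found in the XML."""
    root = ET.fromstring(report_xml.strip())
    fields = []
    for param in root.findall("./ReportParameters/ReportParameter"):
        name = param.get("Name")
        data_type = param.findtext("DataType", default="String")
        fields.append({
            "name": name,
            "prompt": param.findtext("Prompt", default=name),
            # Unknown types fall back to a plain text box.
            "control": CONTROL_FOR_TYPE.get(data_type, "text_box"),
        })
    return fields


if __name__ == "__main__":
    for field in parameter_fields(SAMPLE_REPORT):
        print(field)
```

Because the form is built from the report's own definition, adding a parameter to a report requires no change to the web site code.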

An example of a generated report.

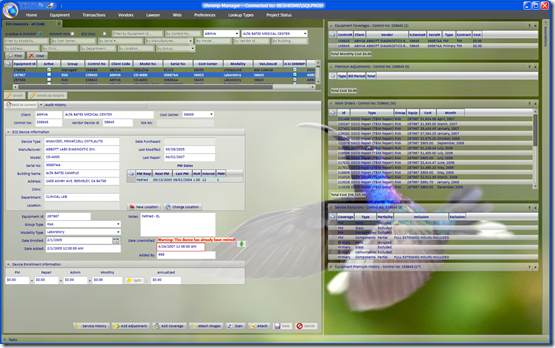

An example of a data entry form with error validations. There are two validation pipelines in the application: errors and warnings. Errors prevent changes to the database, while warnings notify users of a potential mistake.
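The split between errors and warnings can be sketched in a few lines. This is a Python illustration of the concept only, not the application's C# code; the rule helpers (`required`, `unusually_large`) and the field names are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationResult:
    errors: list = field(default_factory=list)
    warnings: list = field(default_factory=list)

    @property
    def can_save(self):
        # Only errors block the save; warnings are informational.
        return not self.errors


def required(field_name):
    """Hypothetical rule: the field must be present and non-empty."""
    def rule(record):
        if not record.get(field_name):
            return f"{field_name} is required"
        return None
    return rule


def unusually_large(field_name, limit):
    """Hypothetical rule: flag values above a limit as a possible typo."""
    def rule(record):
        value = record.get(field_name)
        if value is not None and value > limit:
            return f"{field_name} of {value} looks unusually large"
        return None
    return rule


def validate(record, error_rules, warning_rules):
    """Run both pipelines and collect their messages separately."""
    result = ValidationResult()
    for rule in error_rules:
        message = rule(record)
        if message:
            result.errors.append(message)
    for rule in warning_rules:
        message = rule(record)
        if message:
            result.warnings.append(message)
    return result


if __name__ == "__main__":
    record = {"Name": "", "Amount": 50000}
    outcome = validate(record, [required("Name")], [unusually_large("Amount", 10000)])
    print(outcome.can_save, outcome.errors, outcome.warnings)
```

Keeping the two lists separate lets the form show both kinds of message at once while the save decision depends on the errors alone.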

Users may change multiple settings in the application, such as scanner settings, or size of the font and forms.

Or a background picture.

But the most complicated piece of the application is data mining. Users can see and navigate to associated records within the database, uncovering problems or learning the data easily.

Plus some fun animations throughout the app to keep users’ attention :)

In the next post I’ll dive deeper into some of the trouble points in developing MVVM apps and my personal goals for the project.
